Alibaba Releases QwQ-32B Reasoning Model: 20x Smaller but Equally Powerful as DeepSeek-R1

This new open-source model is as capable as DeepSeek-R1, yet roughly 20 times smaller.

It’s only been two months since DeepSeek-R1 was released to the public. I was really excited because the AI community finally had an open-source model that could stand toe-to-toe with OpenAI’s impressive o1 model.

Yesterday, however, Alibaba released another open-source model that’s just as capable as DeepSeek-R1, yet roughly 20 times smaller.

The Chinese tech giant’s new reasoning model, QwQ-32B, has 32 billion parameters. DeepSeek-R1, by comparison, has 671 billion parameters, of which about 37 billion are actively engaged during inference.
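To make the "20 times smaller" figure concrete, here is a quick back-of-the-envelope calculation using only the parameter counts quoted above. The variable and function names are my own, and the numbers are the rounded figures from this article, so treat it as a rough sketch rather than an exact accounting.

```python
# Parameter counts quoted in this article, in billions (rounded figures).
QWQ_32B_PARAMS = 32      # QwQ-32B, total parameters
R1_TOTAL_PARAMS = 671    # DeepSeek-R1, total parameters
R1_ACTIVE_PARAMS = 37    # DeepSeek-R1, parameters active during inference


def size_ratio(larger: float, smaller: float) -> float:
    """Return how many times bigger `larger` is than `smaller`."""
    return larger / smaller


# Ratio of total parameter counts: this is where "20x smaller" comes from.
total_ratio = size_ratio(R1_TOTAL_PARAMS, QWQ_32B_PARAMS)

# Ratio of DeepSeek-R1's active parameters to QwQ-32B's 32 billion.
active_ratio = size_ratio(R1_ACTIVE_PARAMS, QWQ_32B_PARAMS)

print(f"Total parameters: DeepSeek-R1 is {total_ratio:.1f}x larger")
print(f"Active parameters: DeepSeek-R1 uses {active_ratio:.2f}x as many")
```

The first ratio comes out at about 21, which matches the headline number. The second is only about 1.16, so the size gap is mostly about how many parameters have to be stored and loaded, not how many are used at once during inference.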

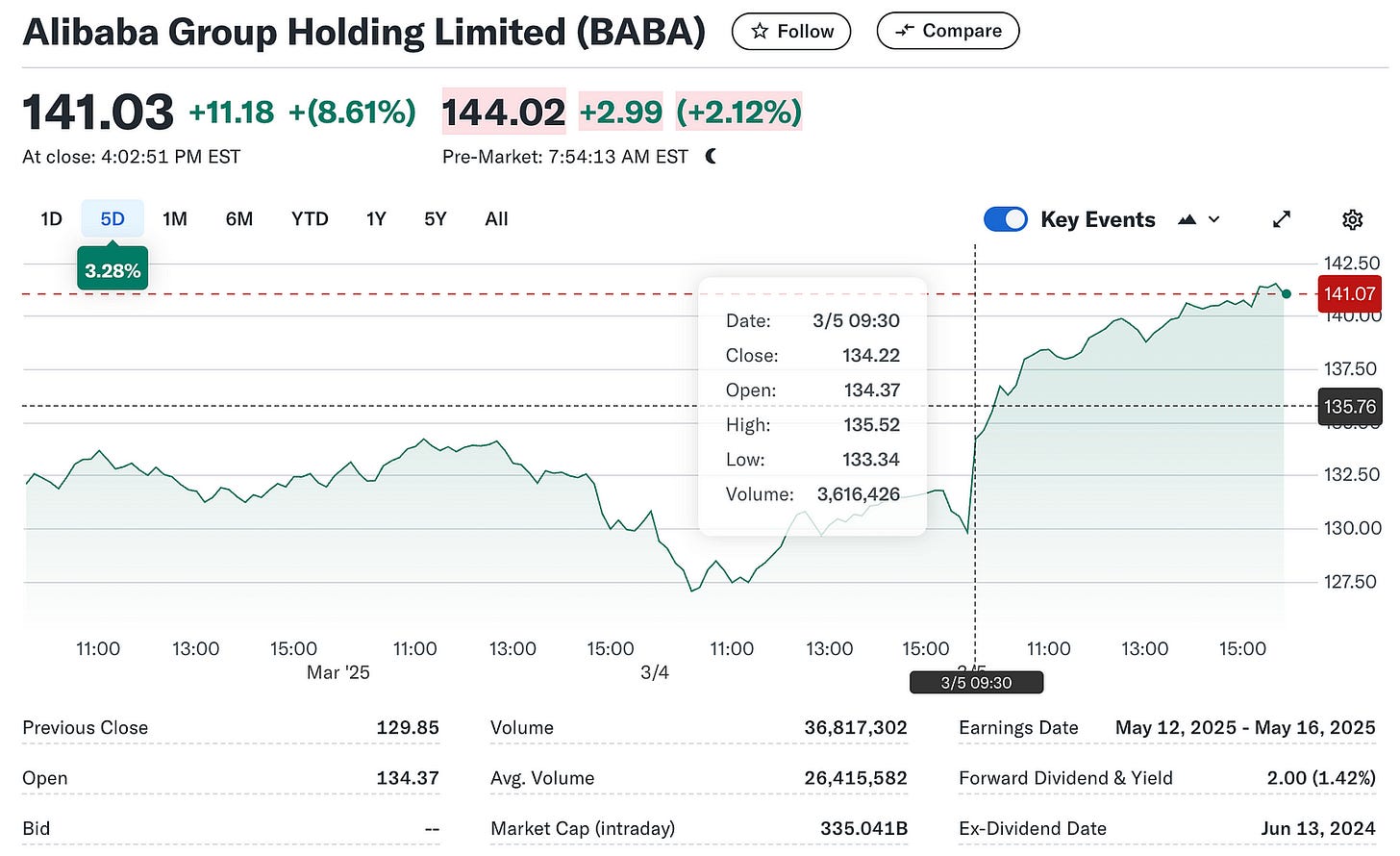

Alibaba has significantly increased its investment in AI, starting with its first large language model in 2023. The company’s Cloud Intelligence unit has become a major growth driver, contributing notably to Alibaba’s profit increase in the December quarter.

“Looking ahead, revenue growth at Cloud Intelligence Group driven by AI will continue to accelerate,” Alibaba CEO Eddie Wu recently said. This optimism around AI’s potential has resonated well with investors, causing Alibaba’s stock price to surge noticeably when QwQ-32B was unveiled.

Key Features of QwQ-32B

The QwQ-32B model was trained with reinforcement learning (RL), a method where the model learns through trial and error, guided by reward signals, rather than relying only on traditional supervised training. The significant advantage here is that the resulting model requires far fewer resources to run, with only 32 billion parameters compared to DeepSeek-R1’s 671 billion parameters (of which about 37 billion are actively used).
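If "learning through trial and error" sounds abstract, the toy sketch below shows the basic idea. It is not Alibaba's training procedure: it is a minimal REINFORCE-style update on a three-answer policy, and the question, the candidate answers, and the function names are all hypothetical. The policy samples an answer, receives a reward of 1 only when the answer is correct, and shifts probability toward answers that were rewarded.

```python
import math
import random


def softmax(logits):
    """Convert raw scores into a probability distribution."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def train_by_trial_and_error(candidates, correct, steps=300, lr=0.5, seed=0):
    """Toy REINFORCE loop: sample an answer, get a reward, update the policy."""
    rng = random.Random(seed)
    logits = [0.0] * len(candidates)  # start with a uniform policy
    for _ in range(steps):
        probs = softmax(logits)
        # Trial: sample one candidate answer from the current policy.
        choice = rng.choices(range(len(candidates)), weights=probs)[0]
        # Feedback: reward is 1 only if the sampled answer is correct.
        reward = 1.0 if candidates[choice] == correct else 0.0
        # Update: move each logit along reward * d(log prob of choice)/d(logit).
        for k in range(len(logits)):
            indicator = 1.0 if k == choice else 0.0
            logits[k] += lr * reward * (indicator - probs[k])
    return softmax(logits)


# Hypothetical question "What is 2 * 3?" with three candidate answers.
candidates = ["5", "6", "7"]
final_probs = train_by_trial_and_error(candidates, correct="6")
best_answer = candidates[final_probs.index(max(final_probs))]
print(best_answer, [round(p, 3) for p in final_probs])
```

Nobody ever tells the policy which answer is right. It only sees a reward after each attempt, which is the difference from supervised training, where the correct output is provided directly as the training target.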

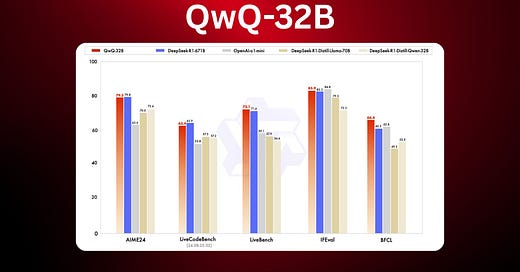

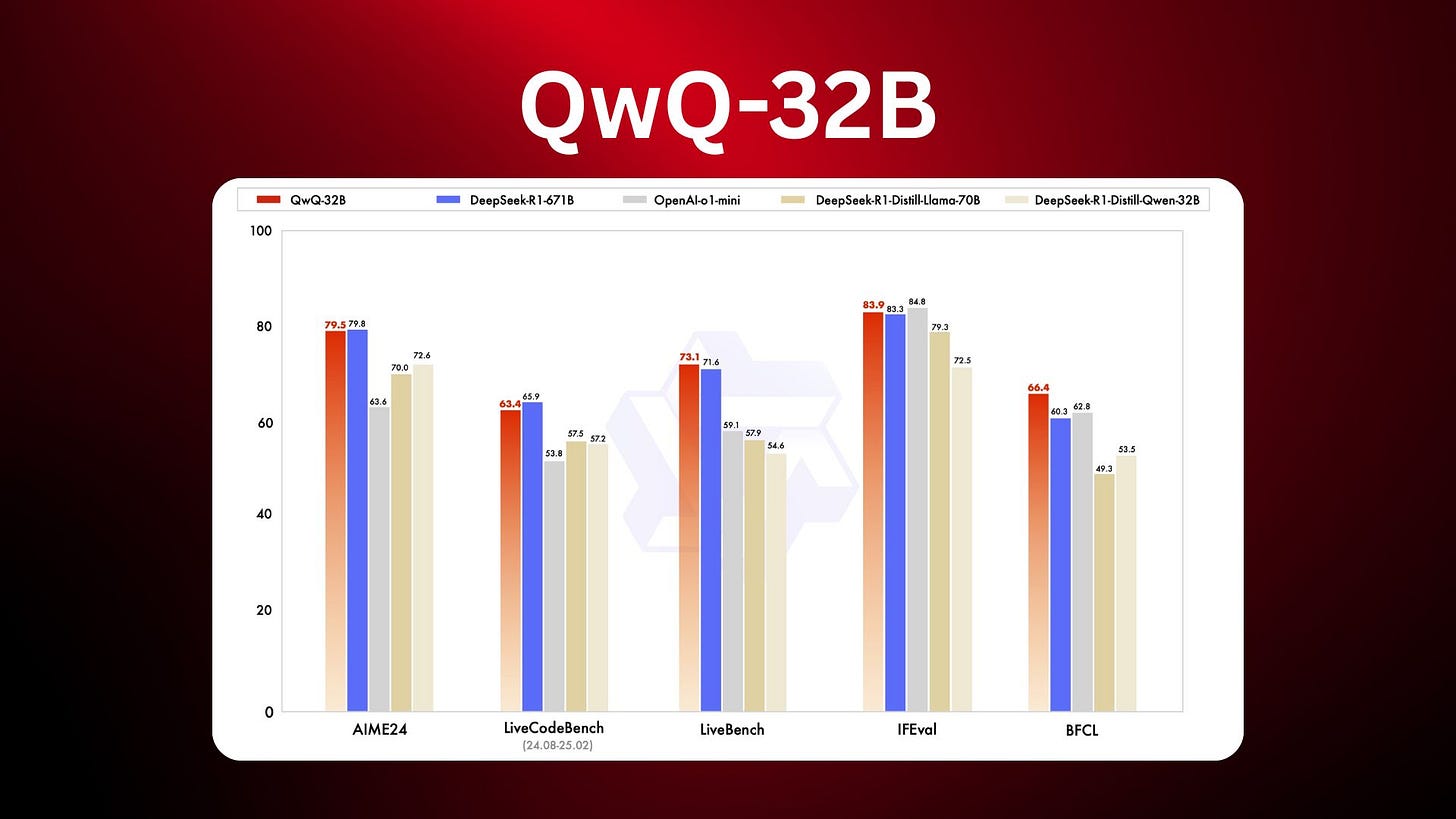

Despite its smaller size, QwQ-32B delivers performance on par with, or even slightly better than, much larger models on certain tasks.

Here’s a summary of its key features: