ByteDance Introduces LatentSync: An Open-Source Lip Sync AI Model

ByteDance just released LatentSync, a new state-of-the-art and open-source model for video lip sync. Here's how you can use it for free.

ByteDance recently introduced LatentSync, a new state-of-the-art, open-source model for video lip sync. It’s an end-to-end lip-sync framework built on audio-conditioned latent diffusion models.

That’s a bit of a mouthful, but it means you can upload a video of someone speaking along with an audio file you want to use instead of the original. The AI then replaces the original audio with the new one and adjusts the speaker’s lip movements to match it closely.

The result is a remarkably convincing, albeit slightly uncanny, deepfake video.

I’m honestly amazed by how fast things have changed in this area. Just a year ago, lip syncing in AI videos felt off, with the mouth movements often looking creepy. Now, with LatentSync, we’re stepping into a new era of easy and convincing deepfake-like videos.

Check out an example below:

If you keep an eye on the latest advancements in AI, you know that videos with lip-synced audio aren’t new. In fact, I wrote about them months ago. What those earlier tools had in common was the quality of the result: many struggled to make the mouth match the spoken words exactly. On top of that, the area around the lips could look off, so the face sometimes seemed unnatural.

This effect is related to the “uncanny valley,” where something looks almost human but still gives you a slight feeling that something’s not quite right. Personally, those older videos always reminded me of low-budget special effects in some indie sci-fi movie.

How LatentSync Works

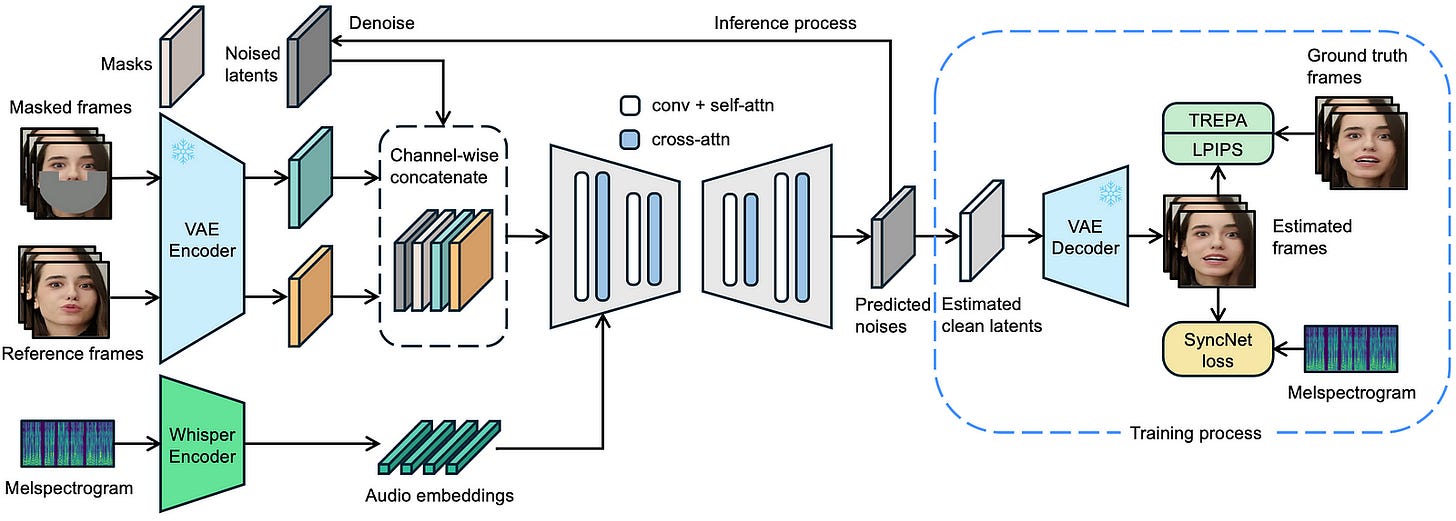

The LatentSync framework uses Stable Diffusion to model complex audio-visual correlations directly. However, diffusion-based lip-sync methods often lack temporal consistency due to variations in the diffusion process across frames.
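To make "lack of temporal consistency" concrete, here is a toy illustration that is not taken from the paper. If each frame picks up its own independent perturbation during generation, neighboring frames stop agreeing and the clip flickers. The `temporal_jitter` helper below is a hypothetical, crude flicker proxy (mean absolute change between consecutive frames), not a metric the LatentSync authors use.

```python
import numpy as np


def temporal_jitter(frames: np.ndarray) -> float:
    """Mean absolute change between consecutive frames.

    frames: array of shape (T, H, W, C) with values in [0, 1].
    A perfectly static clip scores 0.0; flicker pushes the score up.
    """
    if frames.shape[0] < 2:
        return 0.0
    diffs = np.abs(np.diff(frames, axis=0))  # (T-1, H, W, C)
    return float(diffs.mean())


rng = np.random.default_rng(0)
static_clip = np.full((8, 16, 16, 3), 0.5)  # 8 identical gray frames

# Simulate independent per-frame "generation noise": every frame gets its own
# random perturbation, so consecutive frames no longer match each other.
per_frame_noise = rng.normal(0.0, 0.05, size=static_clip.shape)
flickery_clip = np.clip(static_clip + per_frame_noise, 0.0, 1.0)

print(temporal_jitter(static_clip))    # 0.0
print(temporal_jitter(flickery_clip))  # a positive value
```

Even though every individual frame of `flickery_clip` is close to the original gray, the frame-to-frame changes add up to visible flicker, which is the kind of artifact TREPA is meant to suppress.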

To address this, the researchers introduce Temporal REPresentation Alignment (TREPA), which improves temporal consistency while preserving lip-sync accuracy. TREPA aligns generated frames with ground-truth frames using temporal representations extracted by large-scale self-supervised video models.
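A minimal sketch of the idea behind a TREPA-style loss, under clearly stated assumptions: the paper uses a large pretrained self-supervised video model as the frozen encoder, while here a fixed random projection (`make_frozen_encoder`, a hypothetical stand-in) plays that role, and the distance is assumed to be a mean squared error between clip-level features. The key point is that the encoder sees the whole clip, including frame-to-frame changes, so the loss penalizes temporal mismatches and not only per-frame ones.

```python
import numpy as np


def make_frozen_encoder(frame_dim: int, feat_dim: int, seed: int = 0):
    """Hypothetical stand-in for a frozen self-supervised video model.

    Maps a clip of shape (T, frame_dim) to one feature vector that includes
    per-frame features and their frame-to-frame differences.
    """
    rng = np.random.default_rng(seed)
    weights = rng.normal(size=(frame_dim, feat_dim)) / np.sqrt(frame_dim)

    def encode(clip: np.ndarray) -> np.ndarray:
        per_frame = np.tanh(clip @ weights)  # (T, feat_dim)
        motion = np.diff(per_frame, axis=0)  # (T-1, feat_dim): temporal changes
        return np.concatenate([per_frame.ravel(), motion.ravel()])

    return encode


def trepa_style_loss(generated: np.ndarray, ground_truth: np.ndarray, encoder) -> float:
    """Mean squared distance between clip-level representations (assumed form)."""
    g = encoder(generated)
    t = encoder(ground_truth)
    return float(np.mean((g - t) ** 2))


rng = np.random.default_rng(1)
ground_truth = rng.random((8, 32))  # 8 frames, each flattened to 32 values
encoder = make_frozen_encoder(frame_dim=32, feat_dim=16)

# A generated clip with small independent per-frame noise, i.e. mild flicker.
flickery = ground_truth + rng.normal(0.0, 0.05, size=ground_truth.shape)

print(trepa_style_loss(ground_truth, ground_truth, encoder))  # 0.0
print(trepa_style_loss(flickery, ground_truth, encoder))      # a positive value
```

In training, the encoder stays frozen and only the generator receives gradients from this term; this toy NumPy version omits gradients entirely.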

LatentSync uses Whisper to convert mel spectrograms into audio embeddings, which are then integrated into the U-Net through cross-attention layers. The reference and masked frames are concatenated channel-wise with the noised latents to form the U-Net’s input.
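The two conditioning paths described above can be sketched with NumPy. This is a shape-level illustration under assumptions, not LatentSync's code: all sizes are hypothetical, the audio embeddings are random stand-ins (Whisper is not run here), each latent is assumed to have 4 channels, and the raw concatenated input is used directly as the image tokens, whereas a real U-Net applies cross-attention to its intermediate feature maps and uses multiple heads and output projections.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes, chosen only for illustration.
B, C, H, W = 1, 4, 8, 8     # batch, latent channels, latent height, latent width
N_AUDIO, D_AUDIO = 10, 384  # number of audio tokens and their width (assumed)
D_MODEL = 32                # attention width (assumed)

noised_latents = rng.normal(size=(B, C, H, W))
masked_latents = rng.normal(size=(B, C, H, W))     # frame with the mouth region masked
reference_latents = rng.normal(size=(B, C, H, W))  # unmasked reference frame

# Channel-wise concatenation: the U-Net sees all three stacked along axis 1.
unet_input = np.concatenate([noised_latents, masked_latents, reference_latents], axis=1)

# Random stand-in for the audio embeddings Whisper would produce.
audio_embeddings = rng.normal(size=(B, N_AUDIO, D_AUDIO))


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def cross_attention(image_tokens, audio_tokens, w_q, w_k, w_v):
    """Single-head cross-attention: image tokens (queries) attend to audio tokens."""
    q = image_tokens @ w_q                                     # (B, H*W, D_MODEL)
    k = audio_tokens @ w_k                                     # (B, N_AUDIO, D_MODEL)
    v = audio_tokens @ w_v                                     # (B, N_AUDIO, D_MODEL)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(q.shape[-1])   # (B, H*W, N_AUDIO)
    weights = softmax(scores, axis=-1)                         # each row sums to 1
    return weights @ v, weights                                # (B, H*W, D_MODEL)


# Flatten spatial positions into tokens: (B, 3C, H, W) -> (B, H*W, 3C).
image_tokens = unet_input.reshape(B, 3 * C, H * W).transpose(0, 2, 1)
w_q = rng.normal(size=(3 * C, D_MODEL)) / np.sqrt(3 * C)
w_k = rng.normal(size=(D_AUDIO, D_MODEL)) / np.sqrt(D_AUDIO)
w_v = rng.normal(size=(D_AUDIO, D_MODEL)) / np.sqrt(D_AUDIO)

attended, attn = cross_attention(image_tokens, audio_embeddings, w_q, w_k, w_v)
print(unet_input.shape)  # (1, 12, 8, 8)
print(attended.shape)    # (1, 64, 32)
```

The split reflects the two roles: concatenation gives the network pixel-aligned visual context (what the face looks like and where the mouth should go), while cross-attention lets every spatial position pull in whichever audio tokens are most relevant to it.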

During training, the researchers use a one-step method to estimate clean latents from the predicted noise, then decode those latents to obtain estimated clean frames. The TREPA, LPIPS, and SyncNet losses are applied to these frames in pixel space.
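The one-step estimate can be written down directly if we assume the standard DDPM forward process, x_t = √ᾱ_t · x_0 + √(1 − ᾱ_t) · ε, where ᾱ_t is the cumulative noise-schedule value at step t. Solving for x_0 with the predicted noise ε̂ gives x̂_0 = (x_t − √(1 − ᾱ_t) · ε̂) / √ᾱ_t. The sketch below checks that inversion on toy arrays; the value of `alpha_bar_t` and the latent shape are assumptions, and LatentSync's exact parameterization may differ.

```python
import numpy as np


def add_noise(x0: np.ndarray, eps: np.ndarray, alpha_bar_t: float) -> np.ndarray:
    """Forward diffusion: x_t = sqrt(a) * x0 + sqrt(1 - a) * eps."""
    return np.sqrt(alpha_bar_t) * x0 + np.sqrt(1.0 - alpha_bar_t) * eps


def estimate_clean_latent(x_t: np.ndarray, eps_pred: np.ndarray, alpha_bar_t: float) -> np.ndarray:
    """One-step estimate of x0 from the predicted noise (inverts add_noise)."""
    return (x_t - np.sqrt(1.0 - alpha_bar_t) * eps_pred) / np.sqrt(alpha_bar_t)


rng = np.random.default_rng(0)
x0 = rng.normal(size=(4, 8, 8))   # clean latent for one frame (toy size)
eps = rng.normal(size=x0.shape)   # the noise actually added
alpha_bar_t = 0.3                 # schedule value at step t (assumed)

x_t = add_noise(x0, eps, alpha_bar_t)

# A perfect noise prediction recovers x0 up to floating-point rounding.
x0_hat = estimate_clean_latent(x_t, eps, alpha_bar_t)
print(np.allclose(x0_hat, x0))  # True

# An imperfect prediction leaks its error into x0_hat, scaled by sqrt((1 - a) / a).
eps_error = 0.1 * rng.normal(size=x0.shape)
x0_hat_noisy = estimate_clean_latent(x_t, eps + eps_error, alpha_bar_t)
```

Because the estimate takes a single step rather than running the full sampling loop, it is cheap enough to compute at every training iteration, which is what makes it practical to decode x̂_0 and apply pixel-space losses such as TREPA, LPIPS, and SyncNet.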

If you want to learn more about the technical details of LatentSync, check out the paper here.

How To Do AI Lip Sync

Because the model is open-source, third-party platforms have been quick to host it: it’s already available through an API on Replicate and Fal.

On Replicate, find ByteDance’s LatentSync model on the Explore page, open the Playground tab, and upload your reference video and audio file.
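If you’d rather script the same upload than click through the Playground, Replicate’s Python client (`pip install replicate`, with a `REPLICATE_API_TOKEN` environment variable set) provides `replicate.run`. This is a sketch under assumptions: the model reference `bytedance/latentsync` and the input names `video` and `audio` are guesses to confirm on the model’s API tab (Replicate may also require a pinned version in the form `owner/name:version`), and `build_latentsync_input` and `run_latentsync` are hypothetical helper names.

```python
from pathlib import Path

# ASSUMPTION: model reference; Replicate may require "owner/name:version".
MODEL_REF = "bytedance/latentsync"


def build_latentsync_input(video_path: str, audio_path: str) -> dict:
    """Check that both files exist and map them to the model's input names.

    ASSUMPTION: the input names "video" and "audio" are guesses; confirm
    them on the model's API tab before relying on this.
    """
    files = {"video": Path(video_path), "audio": Path(audio_path)}
    for name, path in files.items():
        if not path.is_file():
            raise FileNotFoundError(f"{name} file not found: {path}")
    return files


def run_latentsync(video_path: str, audio_path: str):
    """Send both files to the hosted model and return its output.

    Requires `pip install replicate` and a REPLICATE_API_TOKEN env variable.
    """
    import replicate  # imported lazily so build_latentsync_input works without it

    files = build_latentsync_input(video_path, audio_path)
    with files["video"].open("rb") as video, files["audio"].open("rb") as audio:
        # The client uploads open file handles passed as inputs.
        return replicate.run(MODEL_REF, input={"video": video, "audio": audio})
```

Usage would look like `run_latentsync("speaker.mp4", "new_voice.wav")` (hypothetical file names). Validating the files locally first means a typo in a path fails immediately instead of after a network round trip.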

Subscribe to Generative AI Publication to keep reading this post and get 7 days of free access to the full post archives.