Here's How I Added Gemini 2.0 Flash's Native Image Editing Support To My Web App

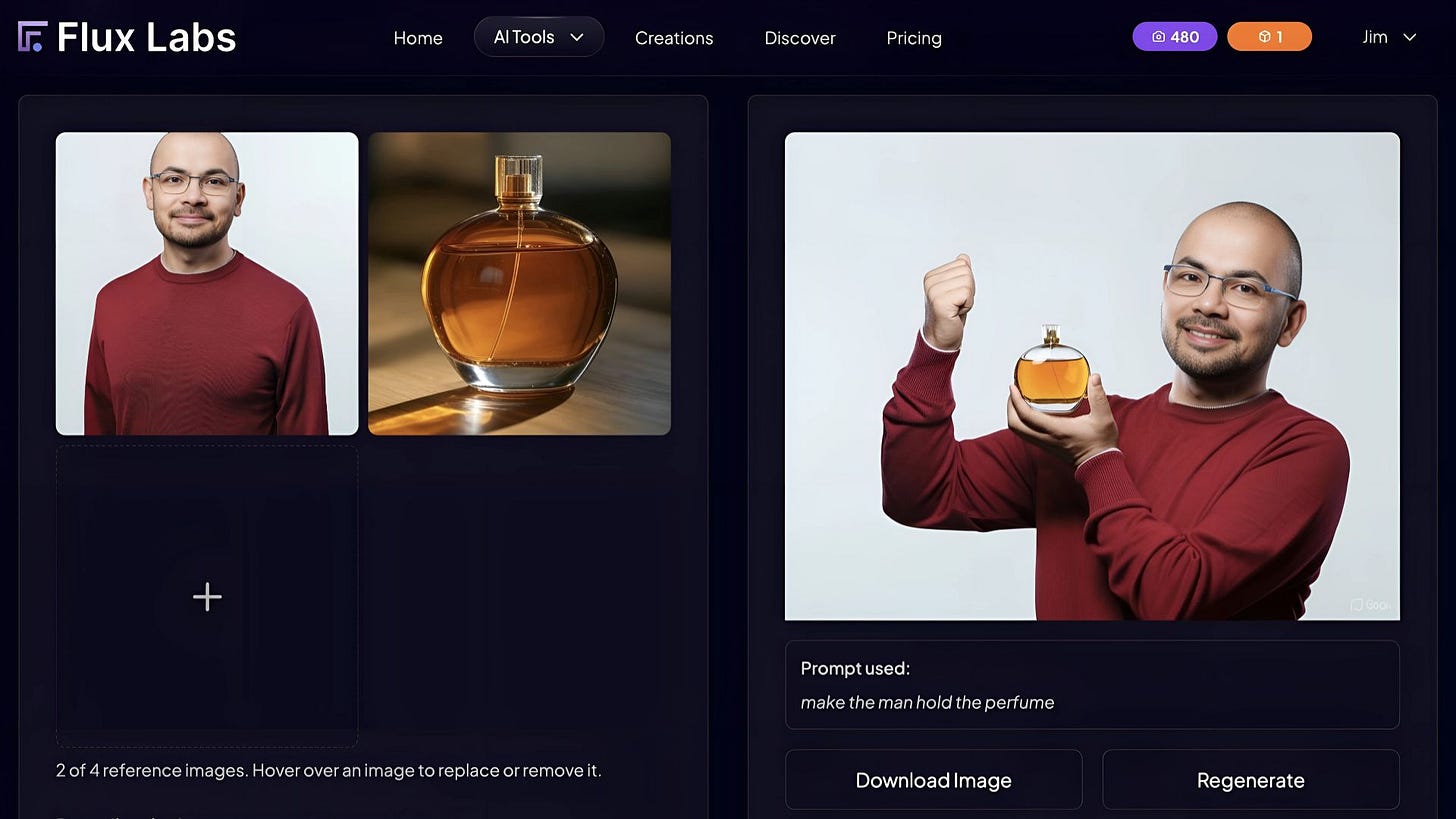

Google's new Gemini 2.0 Flash with image editing capability lets you edit images with natural language.

Google released Gemini 2.0 Flash with native image editing capabilities, and it’s one of the most groundbreaking models Google has released this year. Granted, I’m a little biased, because I’ve been deeply interested in image models ever since I launched my web app for AI images.

The tech giant is known for hard-to-follow API documentation, so I struggled to integrate Gemini 2.0 Flash into Flux Labs over the past few days. Thankfully, Logan Kilpatrick, lead product engineer for Google AI Studio, recently published updated documentation that’s significantly easier to follow.

So, if you’re planning to build apps from scratch or integrate Gemini 2.0 Flash into your existing web app, let me show you exactly how to get it done.

What’s new in Gemini 2.0 Flash?

Google’s Gemini 2.0 Flash now supports native image editing through natural language prompts. Unlike previous multimodal systems that relied on separate models (e.g., combining a language model with Imagen 3 for image generation), Gemini 2.0 Flash processes both text and images within one unified system.

This unified approach removes the need for inter-model communication and significantly reduces latency. Additionally, it now supports embedding longer text directly onto images.
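Because one model handles both text and images, a single request can carry the editing instruction and the source image together. Here’s a minimal sketch that only builds such a request (it doesn’t send it), assuming the Gemini REST `generateContent` endpoint under `v1beta` and the model ID `gemini-2.0-flash-exp` for the experimental model. Both are my assumptions, so check them against the current docs. `build_edit_request` is a hypothetical helper name, not an SDK function.

```python
import base64

# Assumption: Gemini API REST base URL (v1beta) and the experimental model ID
# that AI Studio labels "Gemini 2.0 Flash Experimental".
API_BASE = "https://generativelanguage.googleapis.com/v1beta/models"
DEFAULT_MODEL = "gemini-2.0-flash-exp"


def build_edit_request(
    prompt: str,
    image_bytes: bytes,
    mime_type: str = "image/png",
    model: str = DEFAULT_MODEL,
) -> tuple[str, dict]:
    """Hypothetical helper: return (url, json_body) for one text + image edit call."""
    url = f"{API_BASE}/{model}:generateContent"
    body = {
        "contents": [
            {
                # The instruction and the source image share one turn, so a
                # single model call sees both modalities.
                "parts": [
                    {"text": prompt},
                    {
                        "inline_data": {
                            "mime_type": mime_type,
                            # JSON can't carry raw bytes, so the image is base64-encoded.
                            "data": base64.b64encode(image_bytes).decode("ascii"),
                        }
                    },
                ]
            }
        ],
        # Ask for both text and image parts back (AI Studio's "Images and text").
        "generationConfig": {"responseModalities": ["TEXT", "IMAGE"]},
    }
    return url, body
```

To actually send it, you’d POST `body` as JSON to `url` with your API key attached (the REST examples pass it as the `key` query parameter).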

If you haven’t tested Gemini 2.0’s new image editing capabilities yet, I highly recommend experimenting with it first on Google’s AI Studio before creating a wrapper app or integrating it into your existing web application.

Here’s a quick look at AI Studio. Make sure to select the model “Gemini 2.0 Flash Experimental” and set the output format to “Images and text.”
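With “Images and text” as the output format, a single response can mix text parts and image parts, so your app has to separate them. Here’s a minimal sketch, assuming the REST JSON response shape `candidates[0].content.parts`, where each part holds either `text` or base64-encoded `inlineData`. The function name `split_response_parts` is my own and isn’t part of any Google SDK.

```python
import base64


def split_response_parts(response: dict) -> tuple[list[str], list[tuple[str, bytes]]]:
    """Hypothetical helper: split a generateContent JSON response into
    text parts and decoded (mime_type, image_bytes) parts."""
    texts: list[str] = []
    images: list[tuple[str, bytes]] = []
    candidates = response.get("candidates") or []
    if not candidates:
        return texts, images  # e.g. a blocked or empty response
    for part in candidates[0].get("content", {}).get("parts", []):
        if "text" in part:
            texts.append(part["text"])
            continue
        # Accept camelCase (assumed REST response style) or snake_case keys.
        blob = part.get("inlineData") or part.get("inline_data")
        if blob:
            mime = blob.get("mimeType") or blob.get("mime_type") or "application/octet-stream"
            images.append((mime, base64.b64decode(blob["data"])))
    return texts, images
```

In a web app, you’d typically show the text as a caption and send each image back to the browser with its MIME type, for example as a data URL.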

Take the time to fully appreciate the brand-new features. Once you’re comfortable, let’s dive into the step-by-step integration via API.

Build the user interface first
