Meta Releases Llama 4 AI Models

Llama 4 looks good on paper, but just hours after launch it is already surrounded by controversy.

Meta has just released Llama 4, a new family of natively multimodal AI models with a context window of up to 10 million tokens, five times the previous leading 2-million-token window of Google’s Gemini models. Meta representatives are even calling it a “nearly infinite” context length.

Llama 4 is now powering the latest Meta AI assistant across the web, including WhatsApp, Messenger, and Instagram in 40 countries.

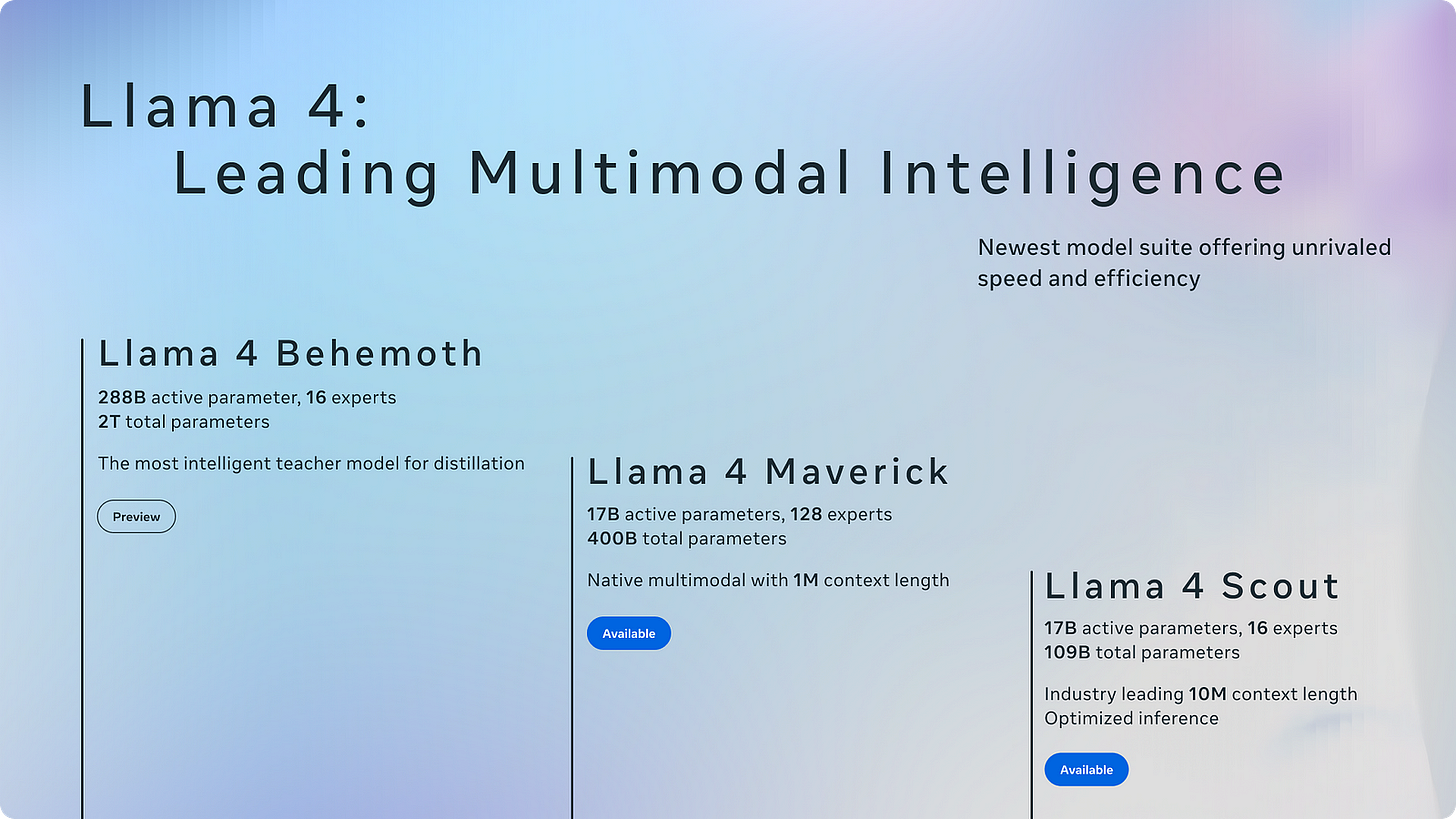

There are three new models in total:

Llama 4 Scout: The “smallest” of the three, with 109 billion total parameters, 17 billion active parameters, and 16 experts. This is the model with the 10-million-token context window.

Llama 4 Maverick: This model has 400 billion total parameters, 17 billion active parameters, and 128 experts. It features a one-million-token context length, which is expected to increase.

Llama 4 Behemoth: A massive model with 2 trillion total parameters, 288 billion active parameters, and only 16 experts. It has not been released yet (“still baking”) but is described as a frontier model comparable in size to models from Anthropic and OpenAI.
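The gap between total and active parameters comes from the expert-based design: only part of each model runs for any given token. As a rough illustration, the Python sketch below computes what share of each model is active, using only the figures quoted above. The names `MODELS` and `active_share_percent` are made up for this example, and the calculation is simple arithmetic, not a description of how Meta routes tokens between experts.

```python
# Parameter counts in billions, exactly as quoted in the article.
# The dictionary layout and names are illustrative assumptions.
MODELS = {
    "Llama 4 Scout": {"total_b": 109, "active_b": 17, "experts": 16},
    "Llama 4 Maverick": {"total_b": 400, "active_b": 17, "experts": 128},
    "Llama 4 Behemoth": {"total_b": 2000, "active_b": 288, "experts": 16},
}


def active_share_percent(total_b: float, active_b: float) -> float:
    """Return the percentage of total parameters that are active per token."""
    if total_b <= 0:
        raise ValueError("total parameter count must be positive")
    return 100 * active_b / total_b


def summarize(models: dict) -> dict:
    """Map each model name to its active-parameter share in percent."""
    return {
        name: active_share_percent(spec["total_b"], spec["active_b"])
        for name, spec in models.items()
    }


if __name__ == "__main__":
    for name, share in summarize(MODELS).items():
        print(f"{name}: {share:.1f}% of parameters active per token")
```

On these numbers, Scout and Behemoth each activate roughly a seventh of their parameters per token, while Maverick activates under a twentieth, which is why its 400 billion total parameters do not translate into proportionally higher compute per token.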

All three announced Llama 4 models are natively multimodal, meaning they can process and understand text, images, and video.

Llama 4’s technical details
