Save $200 With This Open-Source Alternative to ChatGPT's Deep Research

Here's a step-by-step guide on how to set up and use the Open Deep Research project on your local system.

OpenAI’s Deep Research is incredibly powerful. It is an agentic capability that conducts multi-step research on the internet for complex tasks, completing in minutes work that would take an analyst or researcher hours or days.

Unfortunately, it is only available on the $200-per-month Pro plan. This makes sense if you’re a research analyst at a hedge fund, a PhD student, or in some highly technical field that requires a lot of research work.

However, if you’re a blogger, marketer, or content creator of any sort looking to create well-researched content, you probably don’t need the depth of research that Deep Research provides.

Luckily, there are open-source alternatives out there. One of the most popular right now is called Open Deep Research.

What is Open Deep Research?

Open Deep Research is an AI-powered research assistant that combines search engines, web scraping, and large language models to perform iterative, in-depth research on any topic. It was created by David Zhang, the co-founder and CEO of Aomni.

This project is essentially an open-source alternative to OpenAI’s Deep Research feature, which is otherwise only available to subscribers of the $200-per-month Pro plan.

The repository aims to offer a minimal implementation of a deep research agent, one that can refine its research focus over time and dive deeply into subjects. The codebase is intentionally kept under 500 lines so that it is easy to understand and extend.

You can find the source code of the project in this GitHub repository:

The project is written in TypeScript and built with the AI SDK (Vercel’s toolkit, used here with OpenAI models) and Firecrawl.

How Open Deep Research Works

Internally, the agent takes the user’s input and breaks it down into several research threads that run in parallel. It then iterates recursively: new learnings spawn new research threads, and the agent keeps collecting knowledge until it reaches the requested breadth and depth.
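
The recursive loop described above can be sketched in TypeScript. This is a minimal illustration under stated assumptions, not the project’s actual code: `generateQueries` and `research` are hypothetical stand-ins for the LLM query-generation step and the search-plus-extraction step (Firecrawl and an LLM in the real project), passed in as functions so the sketch runs offline. Shrinking the breadth at each level with `Math.ceil(breadth / 2)` is also an assumption made here to keep the tree from growing too fast.

```typescript
// Result of researching one query: what was learned, where, and what to explore next.
interface StepResult {
  learnings: string[];
  urls: string[];
  followUps: string[]; // new research directions suggested by the LLM
}

// Hypothetical stand-ins for the LLM and search/scrape calls.
type QueryGenerator = (goal: string, known: string[], count: number) => Promise<string[]>;
type Researcher = (query: string) => Promise<StepResult>;

interface ResearchState {
  learnings: string[];
  urls: string[];
}

// Merge lists while dropping duplicates, keeping first-seen order.
function unique(lists: string[][]): string[] {
  const seen = new Set<string>();
  const out: string[] = [];
  for (const list of lists) {
    for (const item of list) {
      if (!seen.has(item)) {
        seen.add(item);
        out.push(item);
      }
    }
  }
  return out;
}

async function deepResearch(
  goal: string,
  breadth: number,
  depth: number,
  generateQueries: QueryGenerator,
  research: Researcher,
  state: ResearchState = { learnings: [], urls: [] },
): Promise<ResearchState> {
  // Ask for `breadth` search queries, informed by everything learned so far.
  const queries = await generateQueries(goal, state.learnings, breadth);

  // Run all research threads for this level in parallel.
  const branches = await Promise.all(
    queries.map(async (query): Promise<ResearchState> => {
      const step = await research(query);
      const branch: ResearchState = {
        learnings: unique([state.learnings, step.learnings]),
        urls: unique([state.urls, step.urls]),
      };
      if (depth <= 1) return branch; // last level: stop recursing
      // Recurse on this thread's follow-up directions with a narrower breadth.
      const nextGoal = step.followUps.length > 0 ? step.followUps.join("\n") : query;
      return deepResearch(nextGoal, Math.ceil(breadth / 2), depth - 1, generateQueries, research, branch);
    }),
  );

  // Combine every branch's knowledge into one deduplicated state.
  return {
    learnings: unique(branches.map((b) => b.learnings)),
    urls: unique(branches.map((b) => b.urls)),
  };
}
```

Because the search and LLM calls are injected as functions, the control flow can be tested with stubs before any real API client is wired in.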

Here is the flowchart of what happens behind the scenes.

Step #1: Initial Setup

Takes user query and research parameters (breadth & depth)

Generates follow-up questions to understand research needs better
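
Step #1 mostly comes down to collecting and sanity-checking inputs. The sketch below is hypothetical: `ResearchParams`, `normalizeParams`, and `buildResearchGoal` are illustrative names, and the clamping bounds (breadth 1 to 10, depth 1 to 5) are assumptions rather than limits taken from the project. It shows one way the user’s answers to the follow-up questions can be folded into a single research goal for the later steps.

```typescript
interface ResearchParams {
  query: string;
  breadth: number; // parallel search queries per level
  depth: number; // recursive levels to explore
}

// Keep a user-supplied number inside [lo, hi]; non-finite values fall back to lo.
function clamp(n: number, lo: number, hi: number): number {
  if (!Number.isFinite(n)) return lo;
  return Math.min(hi, Math.max(lo, Math.round(n)));
}

function normalizeParams(query: string, breadth: number, depth: number): ResearchParams {
  const trimmed = query.trim();
  if (trimmed === "") throw new Error("query must not be empty");
  // Bounds are illustrative assumptions, not the project's limits.
  return { query: trimmed, breadth: clamp(breadth, 1, 10), depth: clamp(depth, 1, 5) };
}

// Fold the follow-up questions and the user's answers into one research goal.
function buildResearchGoal(params: ResearchParams, followUps: string[], answers: string[]): string {
  const qa = followUps.map((q, i) => `Q: ${q}\nA: ${answers[i] ?? "(no answer)"}`);
  return [`Initial query: ${params.query}`, "Follow-up questions and answers:", ...qa].join("\n");
}
```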

Step #2: Deep Research Process

Generates multiple search engine (SERP) queries based on the research goals

Processes search results to extract key learnings

Generates follow-up research directions
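
The LLM-facing tasks in Step #2 are easier to reason about with concrete data shapes. This sketch assumes the LLM is asked to return JSON of the form `{ "queries": [{ "query": ..., "researchGoal": ... }] }` (a hypothetical format, not necessarily the project’s): `parseSerpQueries` validates and deduplicates the generated queries, and `buildLearningsPrompt` truncates scraped pages before they go back to the model to extract learnings and follow-up directions. The prompt wording and the character budget are illustrative assumptions.

```typescript
// Hypothetical shape we ask the LLM to return for each generated query.
interface SerpQuery {
  query: string; // text sent to the search engine
  researchGoal: string; // why this query matters, used to steer the next level
}

// Parse and validate the LLM's JSON instead of trusting it blindly:
// malformed entries, blanks, and case-insensitive duplicates are dropped.
function parseSerpQueries(raw: string, limit: number): SerpQuery[] {
  const data: unknown = JSON.parse(raw);
  const list = typeof data === "object" && data !== null ? (data as { queries?: unknown }).queries : undefined;
  if (!Array.isArray(list)) throw new Error("expected an object with a `queries` array");

  const seen = new Set<string>();
  const result: SerpQuery[] = [];
  for (const item of list) {
    if (result.length >= limit) break;
    if (typeof item !== "object" || item === null) continue;
    const { query, researchGoal } = item as { query?: unknown; researchGoal?: unknown };
    if (typeof query !== "string" || typeof researchGoal !== "string") continue;
    const key = query.trim().toLowerCase();
    if (key === "" || seen.has(key)) continue;
    seen.add(key);
    result.push({ query: query.trim(), researchGoal });
  }
  return result;
}

// Truncate each scraped page so the combined prompt stays within a budget
// (characters are used as a rough proxy for tokens).
function buildLearningsPrompt(query: string, pages: string[], maxCharsPerPage: number): string {
  const contents = pages.map((page) => `<content>\n${page.slice(0, maxCharsPerPage)}\n</content>`);
  return `Given these search results for "${query}", list the key learnings and suggest follow-up research directions:\n${contents.join("\n")}`;
}
```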

Step #3: Recursive Exploration

If depth > 0, takes new research directions and continues exploration

Each iteration builds on previous learnings

Maintains context of research goals and findings
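
To get a feel for how breadth and depth interact, it helps to count how many search queries a run issues. The sketch below assumes that every query at one level spawns its own sub-research at the next level, with breadth halved (rounded up) and depth reduced by one. That shrinking rule is an assumption for illustration, and `estimateQueryCount` and `queriesPerLevel` are hypothetical helpers.

```typescript
// Assumption: every query at one level spawns its own sub-research at the next
// level, with breadth halved (rounded up) and depth reduced by one.

// Total number of search queries a run would issue.
function estimateQueryCount(breadth: number, depth: number): number {
  if (breadth <= 0 || depth <= 0) return 0;
  const childBreadth = Math.ceil(breadth / 2);
  // This level issues `breadth` queries; each one recurses one level deeper.
  return breadth + breadth * estimateQueryCount(childBreadth, depth - 1);
}

// The same count broken down per level, top level first.
function queriesPerLevel(breadth: number, depth: number): number[] {
  const levels: number[] = [];
  let calls = 1; // research calls running at the current level
  let b = breadth; // queries each of those calls generates
  for (let d = depth; d > 0 && b > 0; d--) {
    levels.push(calls * b);
    calls *= b;
    b = Math.ceil(b / 2);
  }
  return levels;
}
```

Under this assumption, breadth 4 and depth 2 give 4 + 8 = 12 queries, while depth 3 gives 4 + 8 + 8 = 20. If each query also triggers a scrape and at least one LLM call, this is a quick way to estimate the cost of a run before launching it.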

Step #4: Report Generation

Compiles all findings into a comprehensive markdown report

Includes all sources and references

Organizes information in a clear, readable format
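
Step #4 splits naturally into a part the LLM writes (the narrative summary) and a part that can be assembled in code. One reasonable design, sketched below with a hypothetical `Finding` type and `buildMarkdownReport` helper, is to let the LLM write only the summary and to generate the findings list and numbered sources deterministically, so every URL in the report comes from the crawl rather than from the model. This is an illustration, not necessarily how the project builds its report.

```typescript
// Hypothetical record produced by the earlier research steps.
interface Finding {
  learning: string;
  sourceUrl: string;
}

function buildMarkdownReport(title: string, summary: string, findings: Finding[]): string {
  // Number each distinct URL once, in first-seen order.
  const sourceIndex = new Map<string, number>();
  for (const f of findings) {
    if (!sourceIndex.has(f.sourceUrl)) sourceIndex.set(f.sourceUrl, sourceIndex.size + 1);
  }

  const lines: string[] = [`# ${title}`, "", summary.trim(), "", "## Key Findings", ""];
  // Tag each learning with the number of the source it came from.
  for (const f of findings) lines.push(`- ${f.learning} [${sourceIndex.get(f.sourceUrl)}]`);

  lines.push("", "## Sources", "");
  sourceIndex.forEach((n, url) => lines.push(`${n}. ${url}`));
  return lines.join("\n") + "\n";
}
```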

Key Features of Open Deep Research

Iterative Research: Performs deep research by iteratively generating search queries, processing results, and diving deeper based on findings

Intelligent Query Generation: Uses LLMs to generate targeted search queries based on research goals and previous findings

Depth & Breadth Control: Configurable parameters to control how wide (breadth) and deep (depth) the research goes

Smart Follow-up: Generates follow-up questions to better understand research needs

Comprehensive Reports: Produces detailed markdown reports with findings and sources

Concurrent Processing: Handles multiple searches and result processing in parallel for efficiency
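
Concurrent processing usually needs a cap on how many searches are in flight at once, since search and LLM APIs enforce rate limits. The hypothetical `mapWithConcurrency` helper below starts a fixed number of worker loops that each pull the next unprocessed item, so at most `limit` calls run at the same time and results keep their input order. A small package such as p-limit offers similar behavior; this dependency-free version is only a sketch, not the project’s implementation.

```typescript
// Run `worker` over every item with at most `limit` calls in flight at once,
// returning results in the same order as `items`.
async function mapWithConcurrency<T, R>(
  items: T[],
  limit: number,
  worker: (item: T, index: number) => Promise<R>,
): Promise<R[]> {
  if (!Number.isInteger(limit) || limit < 1) throw new Error("limit must be a positive integer");
  const results = new Array<R>(items.length);
  let next = 0; // index of the next item to hand out

  // Each runner claims the next index until none remain. Claiming needs no lock
  // because JavaScript runs this code synchronously between awaits.
  async function runner(): Promise<void> {
    while (next < items.length) {
      const i = next++;
      results[i] = await worker(items[i] as T, i);
    }
  }

  const runners = Array.from({ length: Math.min(limit, items.length) }, () => runner());
  await Promise.all(runners);
  return results;
}
```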

Setting Up the Open Deep Research Project

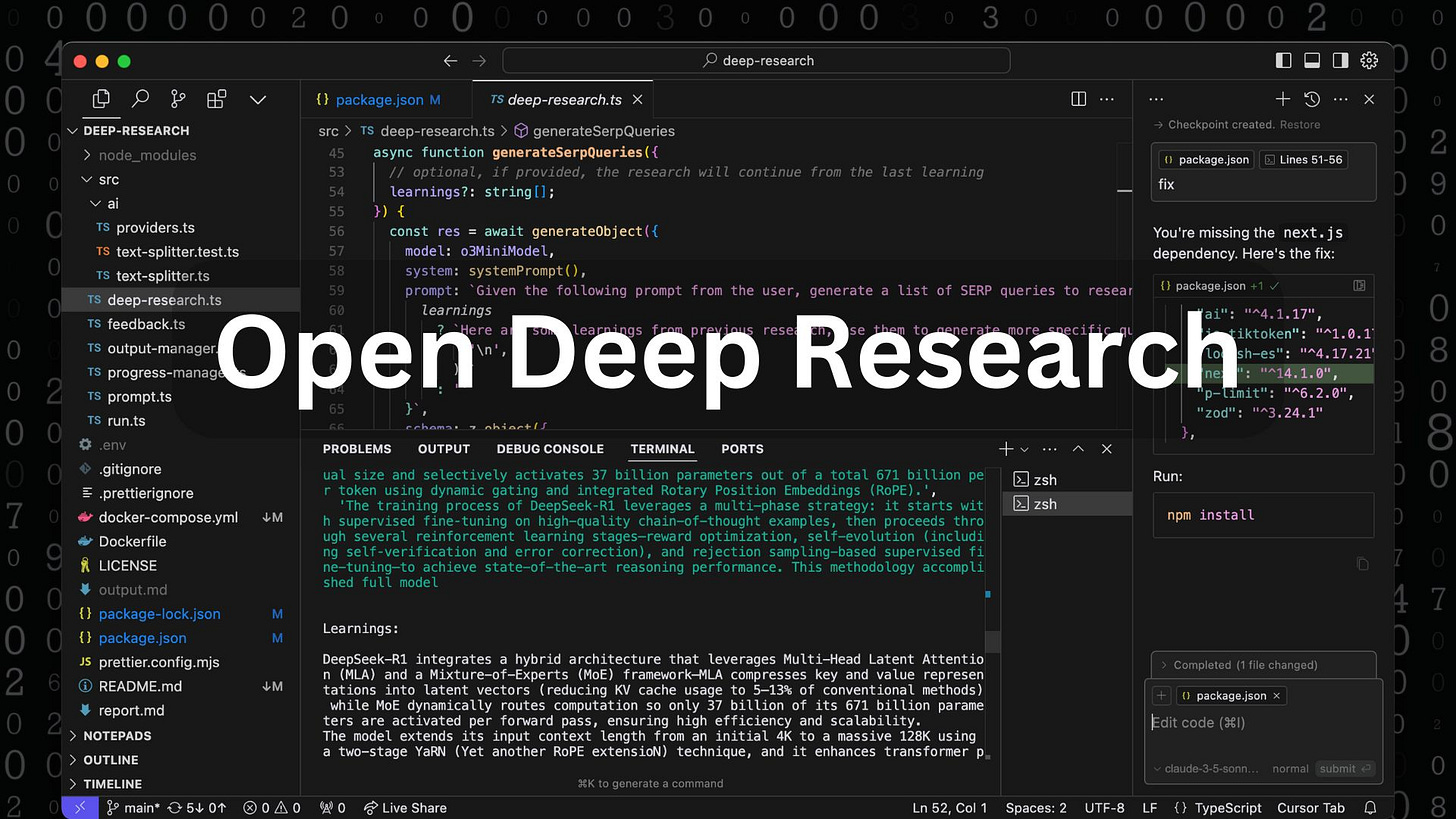

Go to the GitHub page and clone the project to your local disk. Open the project in VS Code and run the command npm install to install the dependencies.


Subscribe to Generative AI Publication to keep reading this post and get 7 days of free access to the full post archives.