Stability AI just announced Stable Fast 3D (SF3D), a new model that generates textured, UV-unwrapped 3D assets from a single image in under a second. That is a big jump in speed: the last AI 3D generators I tried, such as Meta AI’s 3D Gen (text-to-3D) and Unique3D (image-to-3D) from Tsinghua University, take 50–60 seconds to produce a 3D asset.

In this article, we’ll look at what this new Stable Fast 3D generator is, how it works, and how you can try it out.

What is Stable Fast 3D?

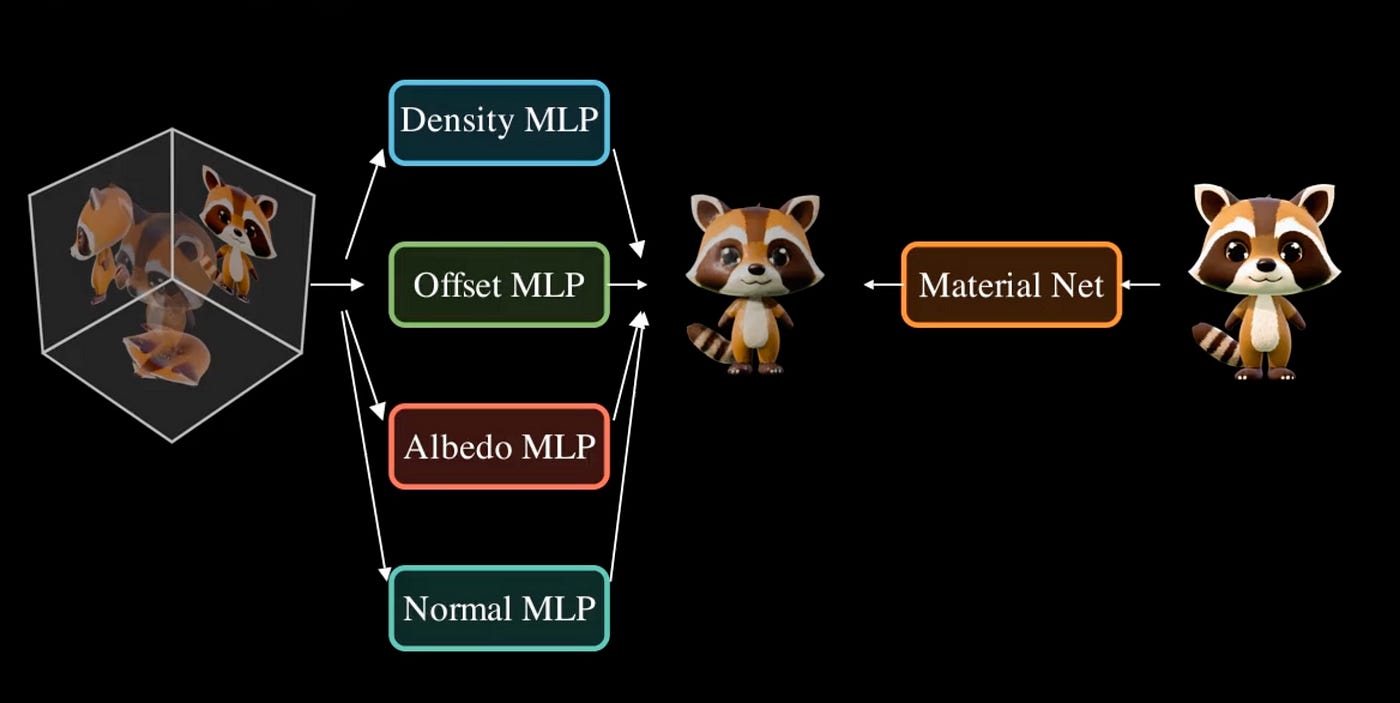

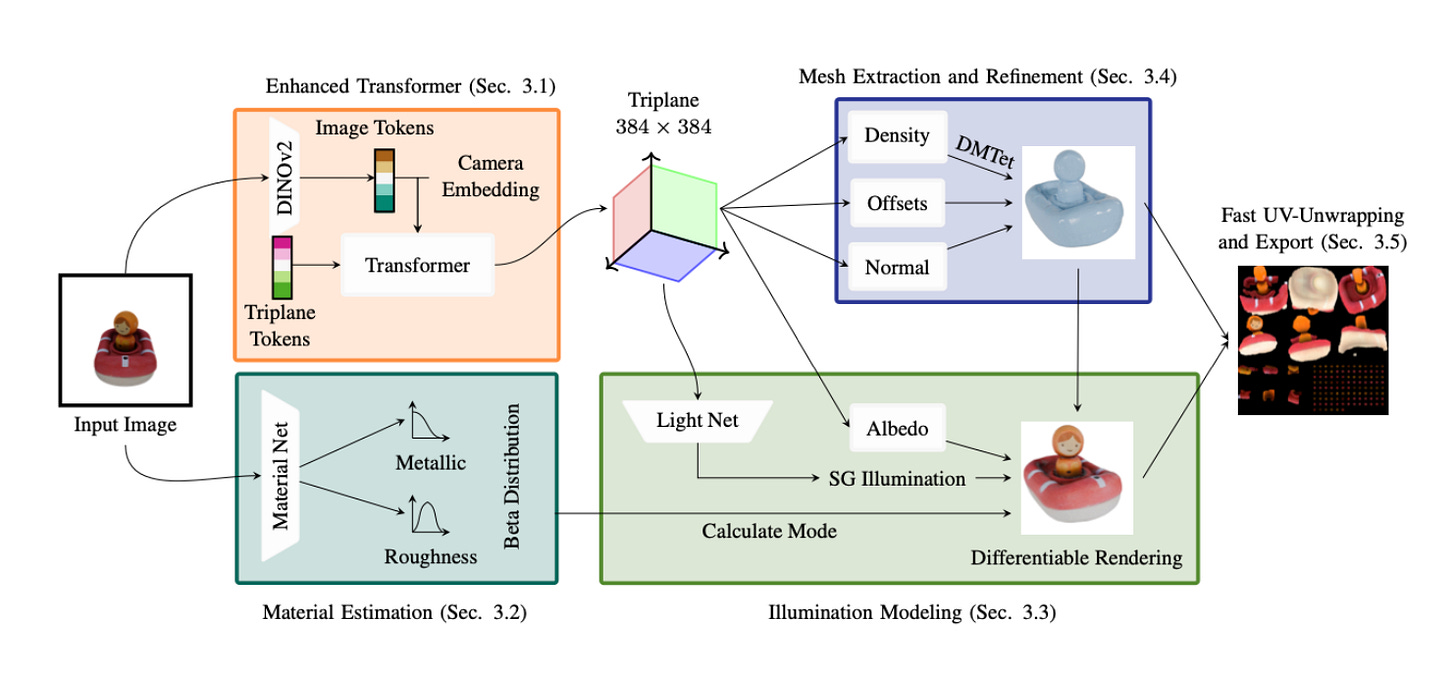

Stable Fast 3D (SF3D) is a new method for reconstructing a high-quality textured object mesh from a single image in just 0.5 seconds.

Unlike most existing approaches, SF3D is explicitly trained for mesh generation and incorporates a fast UV unwrapping technique that enables swift texture generation, avoiding reliance on vertex colors. This method also predicts material parameters and normal maps to enhance the visual quality of the reconstructed 3D meshes.

Additionally, SF3D integrates a delighting step to effectively remove low-frequency illumination effects, ensuring that the reconstructed meshes can be easily used in various lighting conditions.

For more details about Stable Fast 3D, see the SF3D white paper.

How It Works

You start by uploading a single image of an object. Stable Fast 3D then rapidly generates a complete 3D asset, including:

- UV-unwrapped mesh
- Material parameters
- Albedo colors with reduced illumination bake-in
- Optional quad or triangle remeshing (adding only 100–200 ms to processing time)
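To put the remeshing overhead in perspective, here is a quick back-of-the-envelope calculation using only the figures quoted in this article. It assumes the 0.5-second figure excludes remeshing, which the article does not state explicitly; the function names are just for illustration.

```python
# Figures quoted in the article, converted to milliseconds.
BASE_MS = 500                    # SF3D generation time ("0.5 seconds")
REMESH_MS = (100, 200)           # optional remeshing overhead, min and max
BASELINE_MS = (50_000, 60_000)   # 50-60 s for the slower generators mentioned


def total_latency_ms(base_ms, remesh_ms):
    """Total time if remeshing is added on top of the base time (assumption)."""
    return base_ms + remesh_ms


def speedup(baseline_ms, sf3d_ms):
    """How many times faster SF3D is than a baseline taking baseline_ms."""
    return baseline_ms / sf3d_ms


best_case = total_latency_ms(BASE_MS, REMESH_MS[0])    # 600 ms
worst_case = total_latency_ms(BASE_MS, REMESH_MS[1])   # 700 ms

# Most conservative comparison: fastest baseline vs. slowest SF3D run.
low = speedup(BASELINE_MS[0], worst_case)
# Most favorable comparison: slowest baseline vs. fastest SF3D run.
high = speedup(BASELINE_MS[1], best_case)

print(f"With remeshing: {best_case}-{worst_case} ms")
print(f"Speedup over a 50-60 s generator: about {low:.0f}x to {high:.0f}x")
```

Under these assumptions, even with remeshing switched on, the total stays well under a second and remains roughly 70 to 100 times faster than a 50–60 second generator.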

Under the hood, generation begins by passing the input image through a DINOv2 encoder, which produces image tokens representing the image’s features.
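To make "image tokens" a little more concrete: a vision-transformer encoder such as DINOv2 cuts the image into fixed-size square patches and emits one token per patch, plus a class token. The sketch below only counts those tokens. It assumes the 14-pixel patch size of the publicly released DINOv2 models; the input resolutions are illustrative and not necessarily what SF3D uses, and `count_image_tokens` is a hypothetical helper, not part of any library.

```python
def count_image_tokens(height, width, patch_size=14, extra_tokens=1):
    """Number of tokens a ViT-style encoder produces for one image.

    patch_size=14 matches the released DINOv2 models (assumed here).
    extra_tokens=1 accounts for the class token.
    """
    if height % patch_size or width % patch_size:
        raise ValueError("image sides must be multiples of the patch size")
    patches_per_column = height // patch_size
    patches_per_row = width // patch_size
    return patches_per_column * patches_per_row + extra_tokens


# Illustrative resolutions only.
print(count_image_tokens(224, 224))  # 16 x 16 patches + 1 class token = 257
print(count_image_tokens(518, 518))  # 37 x 37 patches + 1 class token = 1370
```

Each of these tokens is a feature vector describing one region of the image, which is what the rest of the pipeline consumes.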
